Changi Airport: Emergency Response Simulator

Challenge

Emergency response training is costly, time-intensive, and logistically complex, especially in critical environments like airports. There is a need for a customizable, efficient way to simulate diverse emergency scenarios without the heavy economic, ecological, and organizational burdens of physical drills.

Solution and my role

A VR-based firefighting simulation creator that lets trainers build customizable training scenarios, including vehicles, obstacles, and atmospheric conditions. I defined the user flow based on firefighters' operational needs and designed the simulator's desktop UI, an intuitive interface that lets users easily build, customize, and deploy realistic training simulations.

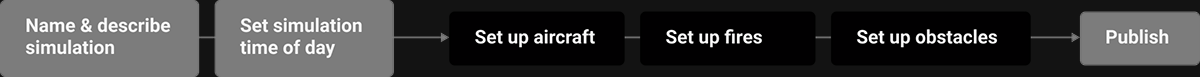

1. User flow (simulation creation)

For the task flow, we found few directly related design case studies. Nevertheless, from the literature on aerodrome firefighting training and from discussions with stakeholders, we identified the following requirements:

- A great many variables go into creating a realistic simulation: different types of fires and extinguishing agents, fire intensities, smoke, debris, communication procedures, and so on.

- Various fire types: aircraft fires, engine fires, internal fires, and fuel-line fires. Aircraft accidents typically involve fuel-spill fires, for which the main extinguishing agent is foam, not water.

- The design must include different fire intensities to simulate realistic firefighting conditions.

To get the most impact from our effort, we focused on the basic scenario: fire configuration on the aircraft, placement of loose fires, and obstacle setup. Mas and I each sketched a journey and converged on:

2. User interface design

2.1 What's out there

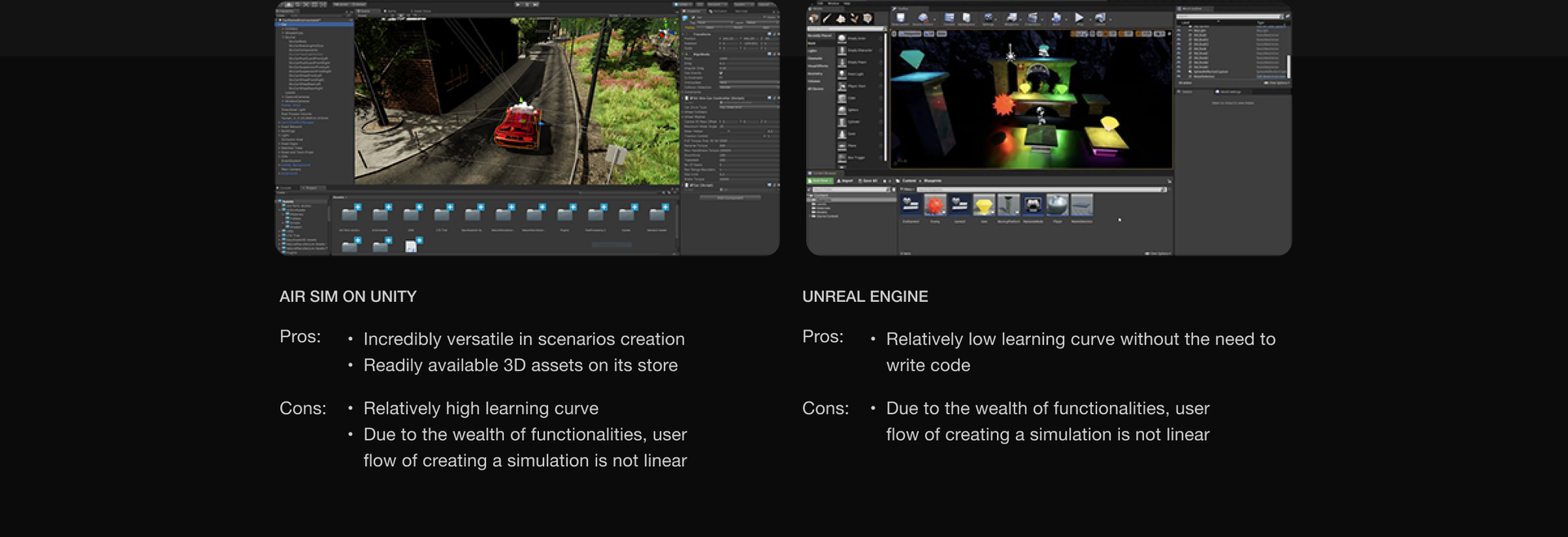

Lacking access to direct competitors' UI/UX designs, I looked into Air Sim (on Unity) and Unreal Engine and found that:

What struck me was that, even though Unreal had a somewhat lower barrier to entry, both engines take substantially longer to set up a simulation for anyone not proficient with keyboard shortcuts, because frequently needed features are either hidden or buried in clutter. With this in mind, I selected a few of the usability heuristics published by the Nielsen Norman Group, including:

- Design should speak the user's language, e.g., use the term 'scenario' (already familiar to trainers) rather than 'scene' (more common among 3D modellers).

- Design should not contain information that is irrelevant at the time or rarely needed.

2.2 3D object interaction UI

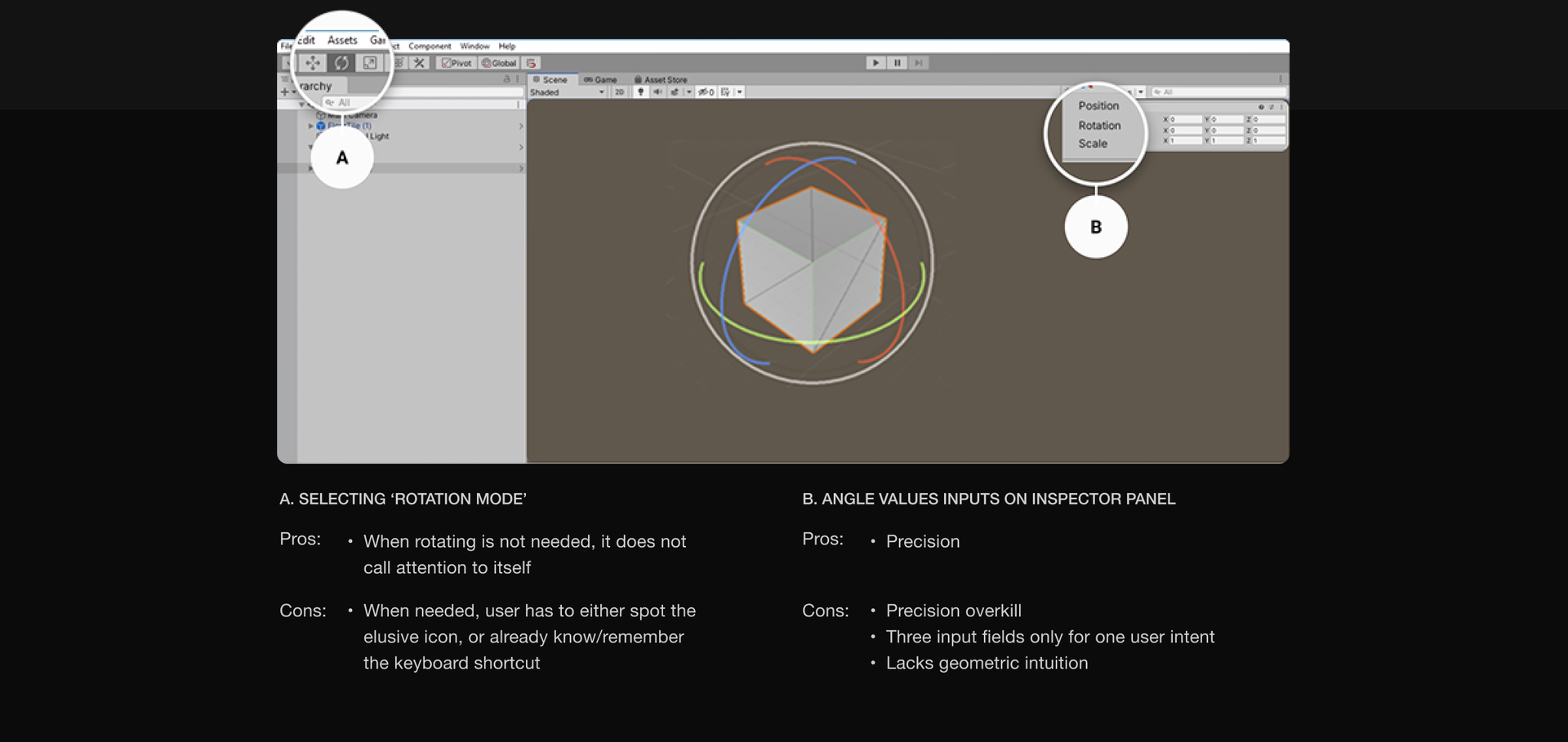

Users can view the world setup in 2D or 3D mode. In 3D mode, rotating an object turned out to be less straightforward than we expected. To see why, consider two ways a user can rotate an object in Unity:

Considering these disadvantages, I designed an interaction that addresses:

- Findability: users do not need to locate a 'Rotate Mode', as in A, and rotate the object directly where it is.

- Intuitiveness: users control the degree of rotation by dragging the scroll bar. Dragging along a single axis (left and right) is easier than dragging in a circular motion, as in A, so new users do not need to learn a new gesture to perform the action.
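The difference between the two gestures can be sketched in a few lines. This is a minimal illustration under assumed conventions, not the simulator's or Unity's actual implementation: the sensitivity constant `DEGREES_PER_PIXEL` and both function names are hypothetical, and the circular version uses the standard math convention (counter-clockwise positive, y pointing up).

```python
import math

# Hypothetical sensitivity: degrees of rotation per pixel of horizontal drag.
DEGREES_PER_PIXEL = 0.5


def linear_drag_rotation(start_angle, drag_dx, degrees_per_pixel=DEGREES_PER_PIXEL):
    """Single-axis drag (left/right): the new angle depends only on horizontal distance."""
    # Python's % returns a value in [0, 360) for a positive modulus, even for negative input.
    return (start_angle + drag_dx * degrees_per_pixel) % 360.0


def circular_drag_rotation(start_angle, center, drag_from, drag_to):
    """Circular drag: the new angle is the angle the cursor sweeps around the object's center.

    Points are (x, y) tuples with y pointing up; with screen coordinates (y down)
    the sign of the swept angle flips.
    """
    a0 = math.atan2(drag_from[1] - center[1], drag_from[0] - center[0])
    a1 = math.atan2(drag_to[1] - center[1], drag_to[0] - center[0])
    return (start_angle + math.degrees(a1 - a0)) % 360.0


if __name__ == "__main__":
    # Dragging 90 px to the right rotates the object by 45 degrees.
    print(linear_drag_rotation(0.0, 90.0))
    # Sweeping a quarter circle around the center rotates it by 90 degrees,
    # but the cursor has to follow an arc around the object to do so.
    print(circular_drag_rotation(0.0, (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)))
```

In the linear version the mapping depends on a single coordinate, so the gesture feels the same wherever the object sits on screen. The circular version depends on the cursor's position relative to the object's center, so small movements close to the center can produce large, hard-to-control rotations.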

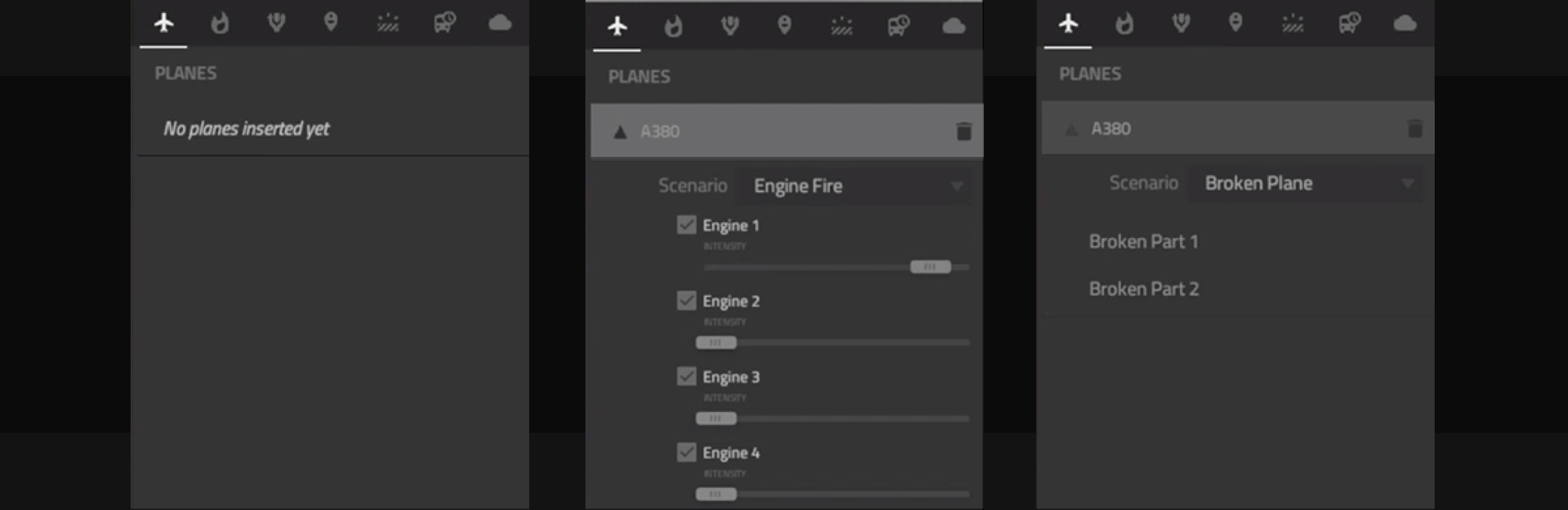

2.3 Desktop UI

The desktop user interface follows the task flow defined earlier, with features such as atmospheric-conditions setup offered as optional, advanced configuration.

Last but not least

The following images show the simulation in action. In VR, the training experience begins with the dispatch of the trainees' vehicles to the emergency site and ends when the fires are successfully extinguished.