VR Prototype: Echolocation

Challenge

Player avatars are often human or anthropomorphized characters, which limits the game narratives, user interactions, and gestures that designers conceive. How might we design a game narrative and user interactions that better immerse the player in the virtual world?

Solution

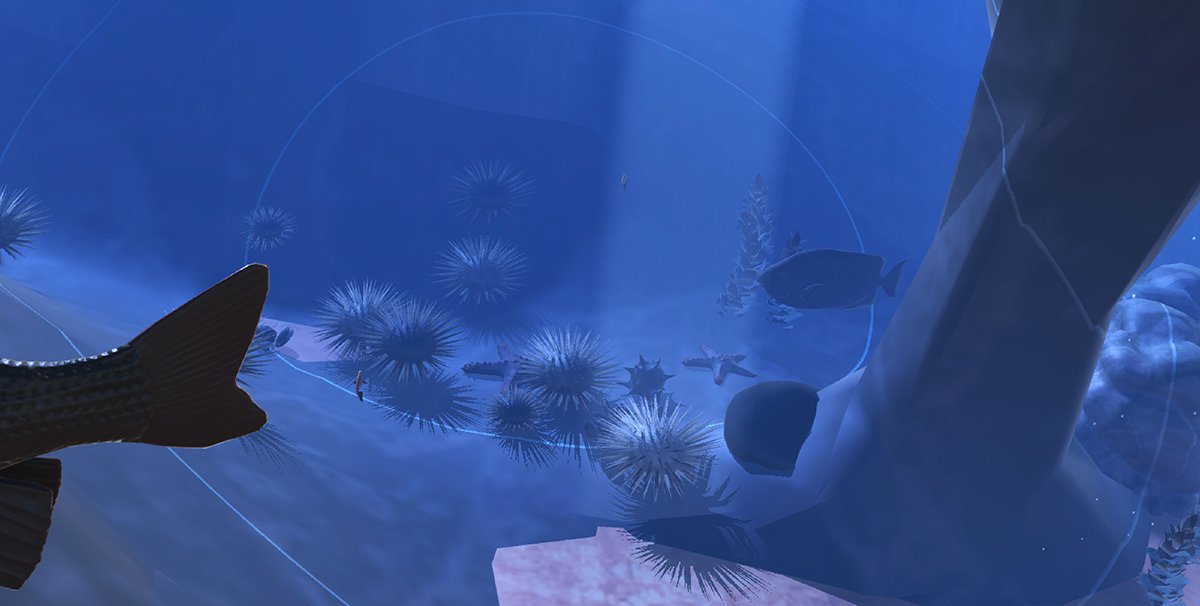

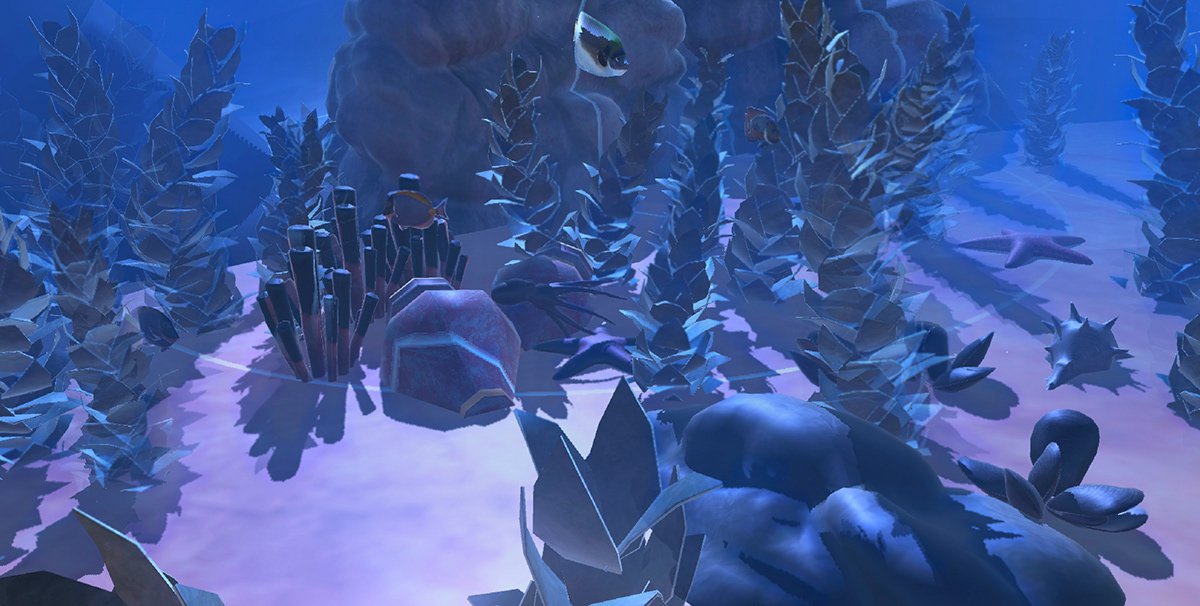

A VR experience in which the player is a dolphin trying to echo-locate an elusive octopus for a pre-lunch snack. Because water visibility is poor, the player can invoke echolocation by fixing their gaze and uttering the word "Click!" to see faraway objects.

I prototyped the interactions in Unity for Google Cardboard and used the Watson Speech to Text and Assistant services to implement the voice input. I also conducted a usability testing session with a colleague.

1. Ideation Phase

To generate ideas for novel game narratives and user interactions, I began by identifying activities which could make interesting user interactions in VR and classifying them according to:

- Activities humans might perform: bird-watching through binoculars, cow-milking, tabla-playing

- Activities non-humans might perform: a bacterium dividing itself, a puffer-fish inflating, the mythological Hydra losing heads and growing new ones

I settled on a dolphin avatar and echolocation, as they offer an interesting way to learn about the animal.

2. Design & Development Phase

2.1 Way-points navigation

For navigation within the scene, I employed standard gaze-and-click way-point navigation to minimize motion sickness. This is crucial because, while diving in open water, it is easy for humans to lose their sense of space. As a further preventive measure, I added many stationary underwater objects, such as seaweed, corals, and boulders, to serve as visual anchors for the player.
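The gaze part of gaze-and-click can be illustrated geometrically: pick the way-point whose direction lies closest to the gaze direction, within a small angular tolerance. This is a minimal sketch and not how Unity or the Cardboard SDK implements its reticle; the function name `gazed_waypoint` and the 5-degree tolerance are assumptions made for illustration.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def gazed_waypoint(head: Vec3, gaze: Vec3, waypoints: Sequence[Vec3],
                   max_angle_deg: float = 5.0) -> Optional[int]:
    """Return the index of the way-point nearest the gaze ray, or None.

    max_angle_deg is a hypothetical tolerance, not a value from the prototype.
    """
    gaze_n = _normalize(gaze)
    best_index: Optional[int] = None
    best_angle = max_angle_deg
    for i, wp in enumerate(waypoints):
        offset = (wp[0] - head[0], wp[1] - head[1], wp[2] - head[2])
        if offset == (0.0, 0.0, 0.0):
            continue  # the player is already standing on this way-point
        to_wp = _normalize(offset)
        # Clamp the dot product to guard acos against rounding error.
        cos_angle = max(-1.0, min(1.0, sum(a * b for a, b in zip(gaze_n, to_wp))))
        angle = math.degrees(math.acos(cos_angle))
        if angle <= best_angle:
            best_index, best_angle = i, angle
    return best_index
```

A click (or a dwell timer) would then move the player to the returned way-point, so the camera only ever jumps between fixed positions.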

2.2 Voice interface

I experimented with the cloud-based Watson Speech to Text service, paired with Watson Assistant, which registers 'intents'. When a player utters the word "Click!", in-game water visibility improves temporarily.
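The temporary visibility improvement can be modelled as a view range that jumps up when "Click!" is recognized and then fades back to the murky baseline. The sketch below is one possible model, not the prototype's code; the class name `VisibilityBoost`, the linear fade, and all numeric values are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VisibilityBoost:
    base_range: float = 5.0      # assumed view distance in murky water
    boosted_range: float = 40.0  # assumed view distance right after a "Click!"
    duration: float = 3.0        # assumed seconds for the boost to fade out
    triggered_at: Optional[float] = None

    def trigger(self, now: float) -> None:
        """Call when the keyword is recognized; 'now' is the game time in seconds."""
        self.triggered_at = now

    def view_range(self, now: float) -> float:
        """Current view distance, fading linearly from boosted back to base."""
        if self.triggered_at is None:
            return self.base_range
        elapsed = now - self.triggered_at
        if elapsed < 0 or elapsed >= self.duration:
            return self.base_range
        remaining = 1.0 - elapsed / self.duration
        return self.base_range + (self.boosted_range - self.base_range) * remaining
```

In a game loop, `view_range` would be polled every frame and fed into whatever controls visibility, for example fog distance.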

To improve the service response time, I lowered the minimum keyword confidence level and reduced the waiting time following the utterance of "Click!". Words that rhyme with "Click!", such as "Lick" and "Flick", were also accepted as keywords for a more forgiving interface.
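The forgiving matching described above can be sketched as a small check over a recognized transcript and its confidence score. This does not use the Watson API; the function name `is_echolocation_trigger` and the threshold of 0.3 are hypothetical, since the actual confidence level used is not stated.

```python
import re

# Rhyming words accepted alongside "click", as described in the text.
ACCEPTED_KEYWORDS = {"click", "lick", "flick"}

# Hypothetical threshold: the value used in the prototype is not given.
MIN_CONFIDENCE = 0.3


def is_echolocation_trigger(transcript: str, confidence: float,
                            min_confidence: float = MIN_CONFIDENCE) -> bool:
    """Return True if the transcript contains an accepted keyword
    and the recognizer's confidence meets the (lowered) threshold."""
    if confidence < min_confidence:
        return False
    # Lowercase and strip punctuation so "Click!" matches "click".
    words = re.findall(r"[a-z]+", transcript.lower())
    return any(word in ACCEPTED_KEYWORDS for word in words)
```

Lowering `min_confidence` trades more false positives for fewer missed utterances, which suits a game where an accidental echolocation costs the player nothing.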

3. Evaluation Phase

I conducted a usability study with a colleague already familiar with way-points navigation in VR and read him the following task:

"You're a dolphin with the ability to echo-locate in murky waters. Find yourself an octopus for a snack and please share your questions or thoughts you may have as you complete the task."

- "Oh the sonar lines' neat."

- "It's crucial to strike a balance in difficulty when designing games: the task, i.e. the number of objects to check, shouldn't be so easy that it feels mindless, nor so hard that the player feels frustrated and gives up."

- "Is something supposed to happen?", asked after the user said "Click!" but experienced delayed feedback.

Using Watson Speech-to-Text and Assistant triggered two separate service calls, putting extra strain on an already slow network. In hindsight, relying on the service overcomplicated things — I should have just hardcoded a simple verbal keyword recognizer instead.

3.2 Retrospection and future exploration

Creating the prototype and running the usability study led to learnings and to more ideas I'm excited about:

- Unity has its own Phrase Recognition, which recognizes specified keywords with a much faster response time. (Just what I need for the simple game!)

- A map displaying areas already explored.

- Reduce the amplitude of the sonar rings to correspond with nearer objects.

- Variations of "Click" utterances such as burst-mode and sensitivity to user's pitch.

- Have the player avoid sharks by echo-locating stealthily.
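
The sonar-ring idea in the list above, where nearer objects produce smaller rings, could be prototyped as a clamped linear mapping from distance to amplitude. This is only a sketch; the function name `ring_amplitude` and all default values are assumptions.

```python
def ring_amplitude(distance: float, max_distance: float = 50.0,
                   min_amplitude: float = 0.1, max_amplitude: float = 1.0) -> float:
    """Map object distance to sonar-ring amplitude: nearer objects get smaller rings.

    All defaults are hypothetical and would need tuning in the scene.
    """
    # Normalize distance to [0, 1], clamping anything outside the sonar range.
    t = min(max(distance / max_distance, 0.0), 1.0)
    return min_amplitude + (max_amplitude - min_amplitude) * t
```

For example, an object 10 units away yields a smaller amplitude than one 30 units away, and anything at or beyond `max_distance` gets the full amplitude.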